Using Git Submodules to Implement Code Access Control

1. Problem and Objective

"Program A" is a multi-module Spring Boot project. To prevent source code leaks, control over its code needs to be tightened.

2. Solutions

Two solutions are proposed to address the risk of code leakage:

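- Split the existing "Program A" back-end code into one project per module. Each person can access only the projects (modules) within their permissions and cannot touch any other project (module). This approach is cheap and simple to carry out. It reduces the risk of the whole code base leaking, but the risk of an individual module's code leaking remains. Developers keep their current computers and configurations, but those computers must be managed through domain control.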

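- Set up development servers in the IDC (data center) and give each developer an Ubuntu VM with 16 GB of RAM, 4 CPU cores and an 80 GB disk. At the same time, replace developers' current office computers with lower-spec machines to cut rental costs. All development is done in a browser that accesses the development tools on each developer's own VM, so developers never touch the code files directly. This approach greatly reduces the risk of leakage but is expensive to implement. To stop pirated software from being installed on the office computers without authorization, those computers can be placed under domain control.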

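2.1 Module Splitting Scheme

The module split of the "Program A" back end must enforce developer access control while still respecting developers' working habits and development efficiency.

After weighing the options, Git's submodule feature is used to enforce developer access control without breaking the structure of the "Program A" back-end code.

Front-end code is not placed under access control for now: every front-end developer can modify the entire front-end code base.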

Figure 1: Example of splitting the "Program A" back end

Splitting procedure:

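- Except for the three modules imes_common, imes_common_model and imes_eureka, move every module of the "Program A" back end, each as a whole project, into a directory at the same level as imes-parent. With the extra modules removed, the imes-parent project becomes the imes-application project. Create an imes-application project in GitLab and upload all files of the local imes-application to it. Note: do not remove any files.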

- Create an imes-dev project in GitLab and upload the entire imes_dev folder that was moved out into it. Note: do not delete or modify any files.

- Repeat the imes_dev steps for each of the other modules.

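All developers can access the imes-application project; access to the other modules is assigned to developers according to management requirements.

Summary: splitting the "Program A" back end involves no deleting or modifying of files, so it is quick and easy.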
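The split steps above can be sketched as a shell script. This is a minimal sketch, not the procedure the team actually ran: it assumes bash and Git, the document's placeholder GitLab URL `http://url/user`, and, importantly, that `imes-parent` is an exported copy without the monolith's `.git` directory, because pushing the old history would carry every module's code into imes-application. The helper names `init_repo` and `split_monolith` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of the module split. Assumes (hypothetically) that imes-parent is an exported
# copy WITHOUT the monolith's .git directory, so no old history gets pushed.
set -euo pipefail

# Placeholder commit identity for the sketch; use the administrator's real identity.
export GIT_AUTHOR_NAME=${GIT_AUTHOR_NAME:-admin} GIT_AUTHOR_EMAIL=${GIT_AUTHOR_EMAIL:-admin@example.com}
export GIT_COMMITTER_NAME=${GIT_COMMITTER_NAME:-admin} GIT_COMMITTER_EMAIL=${GIT_COMMITTER_EMAIL:-admin@example.com}

# Modules that stay in imes-application (from the text).
KEEP=" imes_common imes_common_model imes_eureka "

# Turn a directory into a fresh one-commit repository pointing at its GitLab project.
init_repo() {  # $1 = directory, $2 = remote URL
  git -C "$1" init -q
  git -C "$1" add -A
  git -C "$1" commit -q -m "Initial import (files unchanged)"
  git -C "$1" remote add origin "$2"
}

# split_monolith <imes-parent dir> <GitLab group URL>
split_monolith() {
  local parent=$1 group_url=$2 root module dir
  root=$(dirname "$parent")
  for dir in "$parent"/imes_*/; do
    module=$(basename "$dir")
    case "$KEEP" in *" $module "*) continue ;; esac
    mv "$parent/$module" "$root/$module"                       # move the whole module as-is
    init_repo "$root/$module" "$group_url/${module//_/-}.git"  # imes_dev -> imes-dev.git
  done
  mv "$parent" "$root/imes-application"                        # what remains is imes-application
  init_repo "$root/imes-application" "$group_url/imes-application.git"
}

# Demo on a throwaway directory tree.
WORK=$(mktemp -d)
mkdir -p "$WORK"/imes-parent/{imes_common,imes_common_model,imes_eureka,imes_dev,imes_system}
for m in "$WORK"/imes-parent "$WORK"/imes-parent/imes_*; do echo "<project/>" > "$m/pom.xml"; done
split_monolith "$WORK/imes-parent" "http://url/user"
# After creating the GitLab projects, push each repository, e.g.:
#   git -C "$WORK/imes_dev" push -u origin HEAD
```

Keeping the moved directory names unchanged matters because the parent `pom.xml` refers to its modules by directory; attaching each project back as a submodule at the same path keeps those references valid.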

Developer workflow:

Scenario: developer A has access to the imes-application and imes-system projects but not to any other module.

- Developer A uses the new account to clone imes-application onto their development computer.

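- Developer A enters the imes-application folder and runs `git submodule add http://url/user/imes-system.git imes_system` to pull the imes-system code into the local imes-application directory. Once the IDE has finished indexing, development and debugging work the same way as with the current back-end setup.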
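Developer A's two steps can be replayed end to end in a throwaway sandbox. Local bare repositories stand in for GitLab (an assumption made only so the sketch runs offline), so `http://url/user` is replaced by a temporary path; with real HTTP URLs the `-c protocol.file.allow=always` option is unnecessary. `make_project` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Developer A's steps, replayed against local bare repositories that stand in for GitLab.
set -euo pipefail
export GIT_AUTHOR_NAME=dev-a GIT_AUTHOR_EMAIL=dev-a@example.com
export GIT_COMMITTER_NAME=dev-a GIT_COMMITTER_EMAIL=dev-a@example.com

WORK=$(mktemp -d)
URL="$WORK/remote"          # stands in for http://url/user
mkdir -p "$URL"

# Create a bare "GitLab" project containing a single pom.xml.
make_project() {
  git init -q "$WORK/seed-$1"
  echo "<project/>" > "$WORK/seed-$1/pom.xml"
  git -C "$WORK/seed-$1" add pom.xml
  git -C "$WORK/seed-$1" commit -q -m init
  git clone -q --bare "$WORK/seed-$1" "$URL/$1.git"
}
make_project imes-application
make_project imes-system

# Step 1: clone the project every developer may access.
git clone -q "$URL/imes-application.git" "$WORK/imes-application"
cd "$WORK/imes-application"

# Step 2: attach the permitted module at the path the parent pom expects.
# protocol.file.allow is needed only because this sandbox uses local paths (Git >= 2.38.1).
git -c protocol.file.allow=always submodule add "$URL/imes-system.git" imes_system

# If imes_system is already registered in .gitmodules (as in the example in 2.1.1),
# initialize it instead of adding it:
#   git submodule update --init imes_system
git submodule status
```

If imes-application's `.gitmodules` already lists `imes_system`, `git submodule add` refuses because the path already exists in the index, so the commented `git submodule update --init imes_system` line is the one to use in that case.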

2.1.1 Module Splitting Example

This example uses the split of "Program B". For simplicity, "Program B" keeps only four modules for illustration: imes_common, imes_common_model, imes_eureka and imes_system.

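1. Split "Program B" as described above into three projects: imes-console-common, imes-console-eureka and imes-console-system. Do not modify or delete any files in any of the projects. imes-console-common contains the two modules imes_common and imes_common_model.

Figure 2: Splitting "Program B"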

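2. After system and eureka are added as two submodules of the imes-console-common project and committed, the imes-console-common project shows the two submodule entries.

Figure 3: Project information after adding the submodules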

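3. Developer A has access to the imes-console-common and imes-console-eureka projects. He can see the imes_system directory listed in the imes-console-common project, but clicking it shows a "page not found" message, which achieves the goal of code access control.

Figure 4: Code access control within a project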
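Steps 2 and 3 of the example can be reproduced the same way. In this sketch (local bare repositories stand in for GitLab; `gitf` and `new_project` are hypothetical helpers), the administrator registers both submodules and pushes; developer A's lack of access is simulated by renaming the system repository, and A initializes only `imes_eureka`. The outcome mirrors step 3: `imes_system` is still listed, but its directory stays empty.

```bash
#!/usr/bin/env bash
# Replays 2.1.1 with local bare repos standing in for GitLab; "no access" is simulated
# by hiding imes-console-system after the administrator has pushed.
set -euo pipefail
export GIT_AUTHOR_NAME=admin GIT_AUTHOR_EMAIL=admin@example.com
export GIT_COMMITTER_NAME=admin GIT_COMMITTER_EMAIL=admin@example.com

# Local-path submodules need protocol.file.allow on Git >= 2.38.1; http(s) URLs do not.
gitf() { git -c protocol.file.allow=always "$@"; }

WORK=$(mktemp -d); R="$WORK/remote"; mkdir -p "$R"
new_project() {  # bare "GitLab" project with one README
  git init -q "$WORK/seed-$1"
  echo "$1" > "$WORK/seed-$1/README"
  git -C "$WORK/seed-$1" add README
  git -C "$WORK/seed-$1" commit -q -m init
  git clone -q --bare "$WORK/seed-$1" "$R/$1.git"
}
for p in imes-console-common imes-console-eureka imes-console-system; do new_project "$p"; done

# Administrator: register both submodules in imes-console-common, commit and push.
git clone -q "$R/imes-console-common.git" "$WORK/admin"
cd "$WORK/admin"
gitf submodule add "$R/imes-console-eureka.git" imes_eureka
gitf submodule add "$R/imes-console-system.git" imes_system
git commit -q -m "Add eureka and system submodules"
git push -q origin HEAD

# Developer A cannot reach imes-console-system (GitLab would answer "not found").
mv "$R/imes-console-system.git" "$R/imes-console-system.hidden"

# Developer A: clone the parent, then initialize only the permitted submodule.
git clone -q "$R/imes-console-common.git" "$WORK/dev-a"
cd "$WORK/dev-a"
gitf submodule update --init imes_eureka
# imes_system is still listed in .gitmodules, but its directory stays empty.
```

For such a developer, a bare `git submodule update --init --recursive` (without a path) would fail on `imes_system`, so initializing submodules by path is the practical pattern.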

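2.1.2 Jenkins Builds

Because the overall structure of the "Program A" project is unchanged, the Jenkins build process stays essentially the same, with two changes.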

1. Add one line to the Jenkinsfile: `sh 'git submodule update --init --recursive'`

Figure 5: Jenkinsfile change

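2. Modify the project's build configuration in Jenkins, as shown in Figure 6:

Figure 6: New submodule options in the Jenkins build configuration

Figure 7: Jenkins build test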
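The Jenkinsfile line from step 1 only succeeds if the credentials Jenkins uses can read every module project. A small guard such as the hypothetical `check_submodules` below, run right after that line, makes a missing checkout fail the build with a clear message rather than a later compile error. It is a sketch assuming bash and the `git` CLI, using `git config --file .gitmodules --get-regexp` to list the submodule paths.

```bash
#!/usr/bin/env bash
# Hypothetical pre-build check: fail fast if any submodule in .gitmodules was not checked out
# (for example because the Jenkins credentials lack access to one of the module projects).
set -euo pipefail

check_submodules() {  # $1 = project root, default: current directory
  local root=${1:-.} missing=0 _key path
  [ -f "$root/.gitmodules" ] || return 0           # nothing registered
  while read -r _key path; do
    if [ -z "$(ls -A "$root/$path" 2>/dev/null)" ]; then
      echo "submodule not checked out: $path" >&2
      missing=1
    fi
  done < <(git config --file "$root/.gitmodules" --get-regexp '^submodule\..*\.path$')
  return "$missing"
}

# In the Jenkins shell step this would follow the line added in step 1:
#   git submodule update --init --recursive && check_submodules .

# Demo: a checkout where imes_system's directory exists but is empty.
DEMO=$(mktemp -d)
printf '[submodule "imes_system"]\n\tpath = imes_system\n\turl = http://url/user/imes-system.git\n' > "$DEMO/.gitmodules"
mkdir "$DEMO/imes_system"
check_submodules "$DEMO" || echo "build would stop here"
```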

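2.2 Scheme 1 (module split + local development)

1. Developers keep their current development environments.

2. Administrators separate the "Program A" back-end code by module; each module becomes its own project.

3. Administrators grant access per project (module): each project is granted only to its assigned developers, and other developers cannot view or download it.

4. Administrators revoke developers' existing access to the "Program A" back-end code.

5. Developers pull the code of their own projects onto their local laptops for development.

6. Developers' computers must be managed through domain control.

Figure 8: Local development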
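Steps 3 and 4 (grant access per project, revoke monolith access) can be scripted against GitLab's REST API. This sketch assumes the GitLab API v4 project-member endpoints (`POST /projects/:id/members`, `DELETE /projects/:id/members/:user_id`), a personal access token in `GITLAB_TOKEN`, and hypothetical user IDs, group path and old project path `imes-parent`. It only prints the requests unless `DRY_RUN=0`.

```bash
#!/usr/bin/env bash
# Sketch: apply a per-project permission plan through the GitLab REST API (v4).
# Hypothetical: host, group path, user IDs and the old monolith project path "imes-parent".
# Requires bash 4+ (associative arrays). DRY_RUN=1 only prints the requests.
set -euo pipefail

GITLAB=${GITLAB:-https://gitlab.example.com}
GROUP=${GROUP:-user}
DRY_RUN=${DRY_RUN:-1}

# GitLab user ID -> comma-separated projects the user may access.
declare -A PLAN=(
  [101]="imes-application,imes-system"   # developer A from the text
  [102]="imes-application,imes-dev"
)

api() {  # $1 = HTTP method, $2 = API path, $3 = optional form data
  local url="$GITLAB/api/v4$2"
  if [ "$DRY_RUN" = 1 ]; then echo "$1 $url ${3:-}"; return 0; fi
  curl --fail --silent --show-error --request "$1" \
       --header "PRIVATE-TOKEN: $GITLAB_TOKEN" ${3:+--data "$3"} "$url"
}

apply_plan() {
  local uid p projects
  for uid in "${!PLAN[@]}"; do
    IFS=, read -ra projects <<< "${PLAN[$uid]}"
    for p in "${projects[@]}"; do
      # access_level 30 = Developer; the project path is URL-encoded ("/" becomes %2F).
      api POST "/projects/$GROUP%2F$p/members" "user_id=$uid&access_level=30"
    done
    # Step 4: remove direct membership of the old monolith project.
    api DELETE "/projects/$GROUP%2Fimes-parent/members/$uid"
  done
}

apply_plan
```

Only direct project membership is removed here; if developers originally got access through a GitLab group, that group membership has to be removed instead.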

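2.3 Scheme 2 (module split + server-based development)

1. Operations staff purchase development servers for "Program A" as needed.

2. Operations staff configure the development servers (Ubuntu 20.04 LTS Desktop, with SSH disabled by the administrator) and install the development tools IDEA Projector and Code-Server.

3. Administrators separate the "Program A" back-end code by module; each module becomes its own project.

4. Administrators grant access per project (module): each project is granted only to its assigned developers, and other developers cannot view or download it.

5. Administrators revoke developers' existing access to the "Program A" back-end code.

6. Operations staff take back developers' 32 GB machines and lease 16 GB machines instead.

7. Operations staff assign each developer a development VM and a browser access link.

8. Operations staff set up a development Kubernetes (k8s) cluster and deploy "Program A" on it.

9. Developers work in the browser on their office computers. When working from home, they must connect to the company intranet over VPN.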

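Figure 9: Web-based IDEA

Figure 10: Back-end development in the browser

Figure 11: Front-end development in the browser

Figure 12: Operating system installed on the development server (note: developers cannot access the server itself, only the development tools through the browser)

Figure 13: Server-based development overview

Figure 14: Development server network layout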
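The Code-Server part of step 7 (a development VM and a browser link per developer) could be prepared with a script like the following. It is a sketch: the inventory, IPs and port 8080 are hypothetical, and it only writes files using the keys of code-server's `config.yaml` (`bind-addr`, `auth`, `password`, `cert`); Projector setup is not covered. With `cert: false` the traffic is plain HTTP, so the links should be reachable only on the intranet or over the VPN mentioned in step 9.

```bash
#!/usr/bin/env bash
# Sketch: generate a code-server config file and a browser link for each developer VM.
# Hypothetical: the inventory, IPs and port. Keys follow code-server's config.yaml format.
set -euo pipefail

OUT=${OUT:-$(mktemp -d)}   # staging area; copy each file to ~/.config/code-server/ on its VM
PORT=8080
mkdir -p "$OUT"

# developer name, VM IP (hypothetical)
INVENTORY="dev-a 10.0.1.11
dev-b 10.0.1.12"

: > "$OUT/links.txt"
while read -r dev ip; do
  mkdir -p "$OUT/$dev"
  pass=$(od -An -N12 -tx1 /dev/urandom | tr -d ' \n')   # 24 random hex characters
  cat > "$OUT/$dev/config.yaml" <<EOF
bind-addr: 0.0.0.0:$PORT
auth: password
password: $pass
cert: false
EOF
  echo "$dev http://$ip:$PORT/" >> "$OUT/links.txt"
done <<< "$INVENTORY"
```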

2.4 Scheme 3 (module split + server-based development)

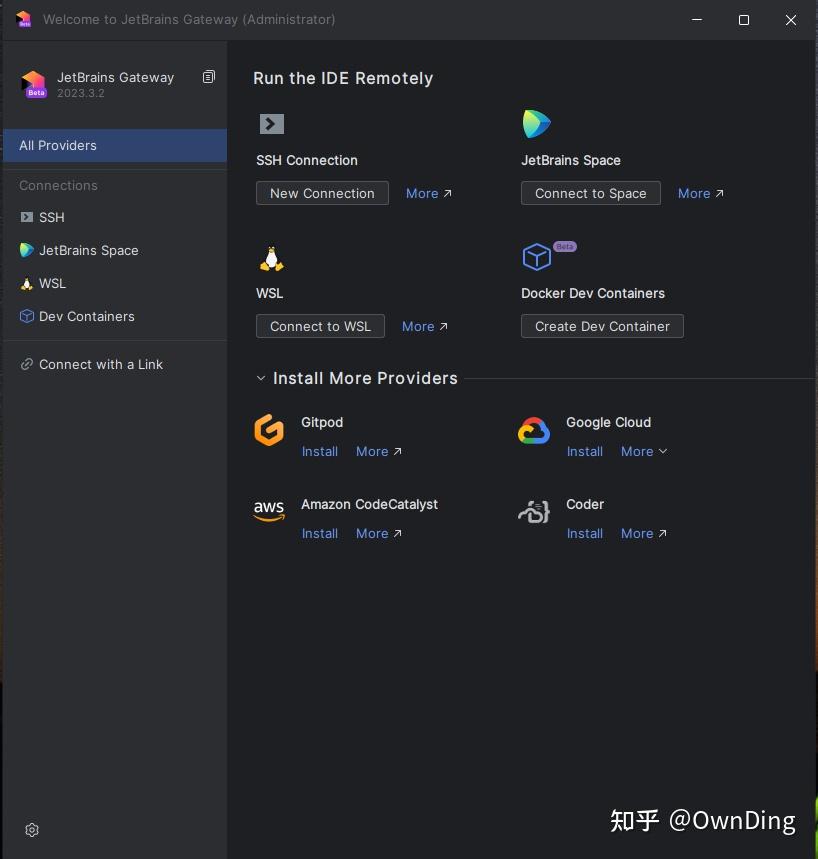

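Same as Scheme 2 (except that SSH must be enabled), with Projector replaced by JetBrains Gateway to achieve the same effect. Gateway is installed on the developer's local computer and connects to the remote machine over SSH. Day-to-day development is the same as using IDEA locally.

Front-end development uses VS Code Remote: developers likewise connect their local VS Code editor to the remote machine over SSH.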
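For the SSH connections used by Gateway and VS Code Remote, operations staff could add one entry per developer to the OpenSSH client configuration. VS Code's Remote - SSH extension lists hosts from `~/.ssh/config`, and the same host, user and key can be entered in Gateway's SSH connection dialog. The alias, IP, remote user and key path below are hypothetical. Key-based login means the developer never types the server password, but the private key grants the same access and has to be protected like one.

```bash
#!/usr/bin/env bash
# Sketch: add an OpenSSH client entry for a developer's remote machine.
# Hypothetical: alias, IP, remote user and key path below.
set -euo pipefail

# add_dev_host <alias> <server-ip> <remote-user> <identity-file> [config-file]
add_dev_host() {
  local alias=$1 host=$2 user=$3 key=$4 cfg=${5:-$HOME/.ssh/config}
  mkdir -p "$(dirname "$cfg")"
  touch "$cfg" && chmod 600 "$cfg"
  if grep -qxF "Host $alias" "$cfg"; then       # keep the script idempotent
    echo "Host $alias already in $cfg" >&2
    return 0
  fi
  cat >> "$cfg" <<EOF

Host $alias
    HostName $host
    User $user
    IdentityFile $key
    IdentitiesOnly yes
    ServerAliveInterval 60
EOF
}

# Demo against a throwaway file instead of the real ~/.ssh/config.
CFG=$(mktemp -d)/config
add_dev_host imes-dev-a 10.0.1.11 dev-a '~/.ssh/imes_dev_a_ed25519' "$CFG"
```

Once the entry exists, `ssh imes-dev-a` and the VS Code host picker both resolve the same alias.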

Download:

JetBrains Gateway - Remote Development for JetBrains IDEs

Like Scheme 2, this scheme does not copy remote code onto developers' computers.

Requirements:

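- Operations staff must set up Git, Java, Node.js and other environments on the server in advance, and log in to Git with each developer's Git credentials beforehand.

- Operations staff must configure Gateway and the SSH login credentials on developers' computers in advance.

- Server account passwords and Git account passwords are not disclosed to developers.

- The code servers must be backed up daily.

- Each developer gets one remote server. After setting up a developer's system and development environment, operations staff should take a snapshot of the server so the environment can be restored quickly if something goes wrong.

- Operations staff should prepare a base server image or snapshot so that a development environment can be deployed quickly when a developer joins, reducing the time spent setting up environments for new staff or after a computer is replaced.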
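The daily backup requirement could be met with a cron job along these lines. This sketch assumes each developer's code lives in one directory per user under a common root, that `tar` and `find` are available, and a 14-day retention; the paths, schedule and retention are hypothetical. It complements, rather than replaces, the VM snapshots described above.

```bash
#!/usr/bin/env bash
# Sketch: daily archive of each developer's workspace, keeping the last N days.
# Hypothetical layout: one directory per developer under <src>. Schedule via cron, e.g.
#   30 2 * * * /usr/local/bin/backup-workspaces.sh /home /backup/workspaces
set -euo pipefail
shopt -s nullglob

backup_workspaces() {  # $1 = source root, $2 = destination dir, $3 = days to keep (default 14)
  local src=$1 dest=$2 keep=${3:-14} stamp dir user
  stamp=$(date +%F)                           # YYYY-MM-DD
  mkdir -p "$dest"
  for dir in "$src"/*/; do
    user=$(basename "$dir")
    # -C keeps archive paths relative to the source root (dev-a/..., not /home/dev-a/...)
    tar -czf "$dest/$user-$stamp.tar.gz" -C "$src" "$user"
  done
  # Delete archives last modified more than $keep days ago.
  find "$dest" -type f -name '*.tar.gz' -mtime +"$keep" -delete
}

# Cron passes the real paths as arguments; without them, run a demo on throwaway directories.
if [ $# -ge 2 ]; then
  backup_workspaces "$@"
else
  SRC=$(mktemp -d); DEST=$(mktemp -d)/backups
  mkdir -p "$SRC/dev-a/imes-application"
  echo "<project/>" > "$SRC/dev-a/imes-application/pom.xml"
  backup_workspaces "$SRC" "$DEST"
fi
```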

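Figure 15: Remote development with Gateway

Figure 16: Experience essentially the same as local development

Figure 17: Multiple IDEA windows can be open at the same time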

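2.5 Scheme 4 (server-based development)

Same as Scheme 3, but without the module split: the "Program A" back end remains a single project instead of being split into per-module projects. This saves the effort of splitting, removes the hidden extra debugging time that the split imposes on developers, and keeps the development experience exactly as developers are used to.

Figure 18: Without splitting, the "Program A" back end can be debugged remotely and smoothly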

3. Comparison of Schemes

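| | Scheme 1 (module split + local development) | Schemes 2/3 | Scheme 4 |
|---|---|---|---|
| Security | Medium | High | High |
| Ease of implementation | High | Low | Medium |
| Cost | Low | High (for 30 to 40 developers: estimated server spend of 60,000 to 100,000, while computer leasing saves 20,000 per year) | High (for 30 to 40 developers: estimated server spend of 60,000 to 100,000, while computer leasing saves 20,000 per year) |
| Ease of development | High | Medium | High |
| Development tool cost | None (but many IDEA and database tools are cracked copies) | None (IDEA Community Edition, Code-Server and Ubuntu are all free) | Paid IDEA licenses may be required |
| Domain control of office/development computers | Required | Not required | Not required |
| Working from home | Supported | Supported | Supported |
| Business travel | Supported | Supported (network required) | Supported (network required) |
| IDE plugin support | Supported | Supported | Supported |
| Development environment consistency | Low | High | High |
| Risks | Development tools include a lot of cracked software | Many people developing on the servers may slow them down and hurt productivity; a fast intranet is required, and network latency reduces efficiency; all code lives on the servers, so a sudden server failure leaves developers unable to work. | Many people developing on the servers may slow them down and hurt productivity; a fast intranet is required, and network latency reduces efficiency; all code lives on the servers, so a sudden server failure leaves developers unable to work. |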
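The cost row can be put in per-developer and payback terms with simple arithmetic. This assumes the 60,000 to 100,000 server spend is a one-time outlay and that the 20,000 per year leasing saving applies in full, ignoring maintenance and power; the per-developer range pairs the lower spend with 40 developers and the higher spend with 30.

```latex
\begin{aligned}
\text{server cost per developer} &\approx \frac{60\,000}{40} = 1\,500
  \quad\text{to}\quad \frac{100\,000}{30} \approx 3\,333 \\
\text{payback period} &= \frac{\text{server spend}}{\text{leasing savings per year}}
  = \frac{60\,000}{20\,000} = 3\ \text{years}
  \quad\text{to}\quad \frac{100\,000}{20\,000} = 5\ \text{years}
\end{aligned}
```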

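4. Scheme Selection

Scheme 1 is less secure than the other schemes. Scheme 2 relies on Projector, which is not responsive enough: compared with Schemes 3 and 4 it feels less smooth, the experience is poor, and working with multiple IDEA windows is cumbersome. Weighing Schemes 1 through 4, Scheme 3 is recommended.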